This document explains the structure of examiner quiz

and question files. The basic principle is that certain headings are

“magic” in that examiner will interpret what follows as

field values for quiz and question objects in the Canvas API or WISEflow

JSON format.

Overview

The question format was originally borrowed from

library(exams), the R package for the R/exams project. See, for example,

its introductory

tutorial.

examiner vs exams

Compared to exams, examiner supports more

question types and export to the WISEflow exam system. However,

exams targets a much wider selection of learning management

systems for output.

For Canvas, examiner uses the Canvas API rather than QTI

(question and

test interoperability) export files. This is mostly because the

learning management systems have notoriously spotty support for the QTI

format and do not provide verifiable examples of all question types and

features. With examiner, you can use the xhtml_features

template to verify what HTML elements you have at your disposal, and see

that they do indeed work for you too:

File > New file > R markdown > From template > xhtml_features

Plain text vs HTML

It is important to be aware that some parts of a question are limited

to plain text. For example, the answer options in a dropdown question

must be plain text, whereas the answer options in a multiple choice

question can be HTML. The parts that do offer HTML let you include any

element which is part of the QTI specification. For more details, see

the xhtml_features template mentioned above.

Quiz files

The main body of the quiz Rmd file becomes the quiz’s description, i.e. the introductory text shown to students before they start.

The YAML header of a quiz file needs to set

output_format to one of the examiner output formats.

Furthermore, the YAML header field questions needs to specify a list of Rmd file names for the question files, like so:

    title: "An example quiz"
    output_format: examiner::canvas
    questions:
    - first_question.Rmd
    - second_question.Rmd

This will work for examiner::wiseflow or examiner::test_knit output too.
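One way to check that a quiz header parses as intended is to read it back with rmarkdown::yaml_front_matter(). This is a minimal sketch and not part of examiner: the file name example_quiz.Rmd and the description text are made up for illustration, and it assumes the rmarkdown package is installed.

```r
# Write a small quiz file to a temporary directory (illustrative content only).
quiz_file <- file.path(tempdir(), "example_quiz.Rmd")
writeLines(c(
  "---",
  'title: "An example quiz"',
  "output_format: examiner::canvas",
  "questions:",
  "- first_question.Rmd",
  "- second_question.Rmd",
  "---",
  "",
  "Welcome to the quiz. This text becomes the quiz description."
), quiz_file)

# Read the YAML header back as an R list.
header <- rmarkdown::yaml_front_matter(quiz_file)

header$output_format  # the chosen output format, as a string
header$questions      # character vector of question file names
```

If header$questions does not come back as a character vector of file names, the list in the header is probably malformed.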

For Canvas output only, a field called

canvas_quiz_options can be used to specify additional quiz

properties as specified in the Quizzes

API docs.

For example, the following specifies the parameters documented as

quiz[quiz_type] and quiz[allowed_attempts].

(Note that you should not include the quiz[...] wrapper.)

Study the API docs for details on what parameters and values are

available.

    title: "An example quiz"
    output_format: examiner::canvas
    questions:
    - first_question.Rmd
    - second_question.Rmd
    canvas_quiz_options:
      quiz_type: practice_quiz
      allowed_attempts: -1

Question files

As I implemented question types beyond those supported by

exams, I invented new conventions as I went along, for

example how to define student feedback for each specific answer

option.

The pieces of a question are parsed from the HTML produced by

rmarkdown::render(output_format = "html_fragment"). Thus,

it does not matter if you use # Question or “Question”

underlined with ======== to create the level 1 Question

header.
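The equivalence of the two heading styles can be checked by rendering both to an HTML fragment and comparing the results. The sketch below assumes that rmarkdown and pandoc are installed. The helper render_fragment() and the file names are made up for illustration and are not part of examiner.

```r
# Hypothetical helper: render markdown lines to an HTML fragment
# and return the resulting HTML as a character vector.
render_fragment <- function(lines, name) {
  input <- file.path(tempdir(), paste0(name, ".Rmd"))
  writeLines(lines, input)
  output <- rmarkdown::render(input, output_format = "html_fragment",
                              quiet = TRUE)
  readLines(output)
}

atx    <- c("# Question", "", "What is 2 + 2?")
setext <- c("Question", "========", "", "What is 2 + 2?")

if (rmarkdown::pandoc_available()) {
  html_atx    <- render_fragment(atx, "heading_atx")
  html_setext <- render_fragment(setext, "heading_setext")
  # Both styles are level 1 headings in markdown, so the HTML should match.
  print(identical(html_atx, html_setext))
}
```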

multiple_choice_question (minimal template)

The following headings are mandatory:

- # Question: Level 1 heading. Everything between there and Answerlist becomes the statement of the question, often called the “stimulus”. This can be HTML, which allows for images, video, math, and more.
- ## Answerlist: Level 2 heading under Question. The content under this heading must be a bulleted list. Each item defines an answer option and can be HTML.
- # Meta-information: Level 1 heading. This contains one or more lines of plain text in a format adopted from library(exams). For example:

      extype: multiple_choice_question
      exsolution: 100
      expoints: 3

  This specifies that the question type is multiple choice, that the first of the three answer options is the correct one, and that the question is worth three points (default 1 point).

I slightly regret adopting this convention because it does not play nice with RStudio’s “canonical markdown” syntax, which will run the lines together. Also, the “ex”-everything syntax is clunky. But for now it’s what I have.
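To make the meta-information format concrete, the sketch below decodes the three example lines in base R. It illustrates the format only and is not examiner's actual parser; all variable names are hypothetical.

```r
meta_lines <- c(
  "extype: multiple_choice_question",
  "exsolution: 100",
  "expoints: 3"
)

# Split each "key: value" line at the first colon.
keys   <- trimws(sub(":.*$", "", meta_lines))
values <- trimws(sub("^[^:]*:", "", meta_lines))
meta   <- setNames(as.list(values), keys)

# exsolution has one character per answer option: "1" = correct, "0" = incorrect.
is_correct <- strsplit(meta$exsolution, "")[[1]] == "1"
which(is_correct)  # 1: the first option is the correct one

# expoints defaults to 1 point when the line is absent.
points <- if (is.null(meta$expoints)) 1 else as.numeric(meta$expoints)
```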

Here is the multiple_choice_question template available

from

File > New file > R markdown > From template.

Note: There can be more than one correct answer to a multiple choice question.

This lets you mix in survey-like questions like “What operating

system do you use”, awarding points to Windows, Mac and Linux alike.

Very useful for getting to know your audience. Importantly, you

must use the API (e.g. via library(examiner)) to

set multiple correct answers. Editing such a question with the GUI will

keep only the first correct answer as correct.

multiple_choice_question (showcasing student-specific feedback)

Below is the “multiple_choice_question with feedback”

template available from

File > New file > R markdown > From template. It

showcases the optional features for student-specific feedback. It also

includes an explanation of the format before the Question header.

Note: All auto-scored question types can utilize

correct_comments, incorrect_comments and

neutral_comments.

multiple_answers_question

This format is exactly like multiple_choice_question. The only difference is in the display: students can tick more than one answer option.

short_answer_question = Fill In the Blank

Each Question/Answerlist item defines an accepted answer. A student who answers with any of those will score.

Optional Solution/Answerlist items specify feedback per answer.

fill_in_multiple_blanks_question = Fill In Multiple Blanks

The “blanks” placeholders are marked in the question text by square brackets, and the name within the brackets identifies each placeholder.

So, the structure of each Answerlist item below is:

- name of placeholder
    - accepted answer written by student
        - feedback if this is the student’s answer
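When writing the Answerlist, it can help to list the placeholder names that the question text actually defines. A minimal base R sketch, using a made-up question text (this is not how examiner itself extracts placeholders):

```r
# Made-up question text with two placeholders.
question_text <- "Roses are [color1] and violets are [color2]."

# Find every "[name]" and strip the surrounding brackets.
matches <- regmatches(question_text,
                      gregexpr("\\[[^]]+\\]", question_text))[[1]]
placeholders <- gsub("^\\[|\\]$", "", matches)
placeholders
```

Each name returned this way should appear exactly once as a top-level Answerlist item.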

multiple_dropdowns_question = Multiple Dropdowns

Placeholders are marked in the question text as in the previous question type.

The exsolution meta-information states which answer

alternatives are correct, using a string of zeros (incorrect), ones

(correct), and spaces (separating placeholders). For example,

100 101 means that for placeholder 1 its first alternative

is correct, while for placeholder 2 its first and third alternatives are

both correct.
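The encoding can be unpacked with a few lines of base R. This is only an illustration of the string format described above, not examiner's own code:

```r
exsolution <- "100 101"

# One group of digits per placeholder, separated by spaces.
groups <- strsplit(exsolution, " +")[[1]]

# For each placeholder, the positions of the alternatives marked correct.
correct <- lapply(strsplit(groups, ""), function(flags) which(flags == "1"))
correct[[1]]  # 1: first alternative of placeholder 1
correct[[2]]  # 1 3: first and third alternatives of placeholder 2
```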

The structure of each Answerlist item is:

- name of placeholder 1
    - possible answer 1.1
        - feedback if answer 1.1 is chosen
    - possible answer 1.2
        - feedback if answer 1.2 is chosen
    - etc
- name of placeholder 2
    - possible answer 2.1
        - feedback if answer 2.1 is chosen
    - etc

matching_question = Matching

The structure of each Answerlist item is:

- text of left side item 1
    - matching right side item
        - feedback if left side item is incorrectly matched
- text of left side item 2
    - matching right side item
        - feedback if left side item is incorrectly matched
- etc
- DISTRACTORS (literally the text “DISTRACTORS”)
    - right side item which matches nothing
    - another right side item which matches nothing
    - etc

numerical_question = Numerical Answer

An Answerlist item defines a correct answer. There can be more than one correct answer.

A correct answer can be specified as “exact”, “range” or “precision”. In the example below, the first two are “exact”, the third is a “range” (inclusive), the fourth is “exact” but with an accepted margin, and the fifth is “precision” with two significant digits.

examiner will export a numerical_question

to WISEflow as a “Fill in maths” question with a single response

placeholder below the question text.

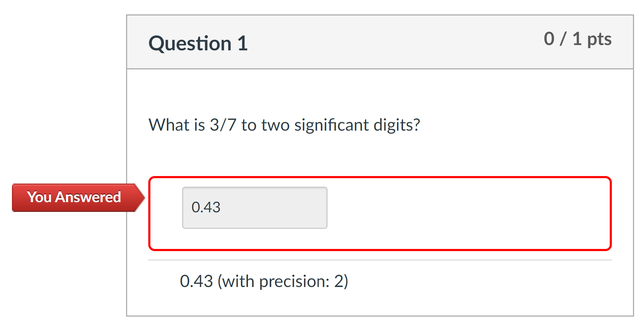

Canvas “precision” is buggy!

Unfortunately, the Canvas implementation of “precision” is buggy: It does a character-based comparison of the significant digits.

So if you ask for an answer of 3/7 = 0.4285714…, 0.43 will not be accepted, because the “3” differs from the “2” in the string representation. To add insult to injury, Canvas will inform you that you should indeed have answered 0.43 [sic]:

A workaround is to construct a range answer allowing for roundoff error. I usually also include the exact answer for feedback.
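As a sketch of that workaround in R: compute the bounds around the rounded answer and check that a rounded response falls inside them. The variable names are illustrative, and the half-unit tolerance of 0.005 corresponds to rounding to two decimals.

```r
exact   <- 3/7
rounded <- round(exact, 2)   # 0.43

# Accept anything that rounds to the same two decimals.
lower <- rounded - 0.005     # 0.425
upper <- rounded + 0.005     # 0.435

student_answer <- 0.43
student_answer >= lower && student_answer <= upper  # TRUE
```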

So, the Answerlist might look like this:

* `r 3/7`
* `r round(3/7, 2) - 0.005` - `r round(3/7, 2) + 0.005`

rendering as

* 0.4285714
* 0.425 - 0.435

and giving helpful feedback:

fill_in_multiple_numerics_question ≈ Fill in maths

This is a WISEflow-only format which is like

numerical_question, but with multiple placeholders. Such

questions are not possible in Canvas Classic Quizzes. On the other hand,

WISEflow offers many other kinds of math questions which are not

supported by examiner.

For now, only a single answer can be specified for each placeholder, but they can be exact, range, with margin, or precision. (The latter two are implemented as a corresponding range answer.)

The Answerlist must specify the placeholders in order of appearance.

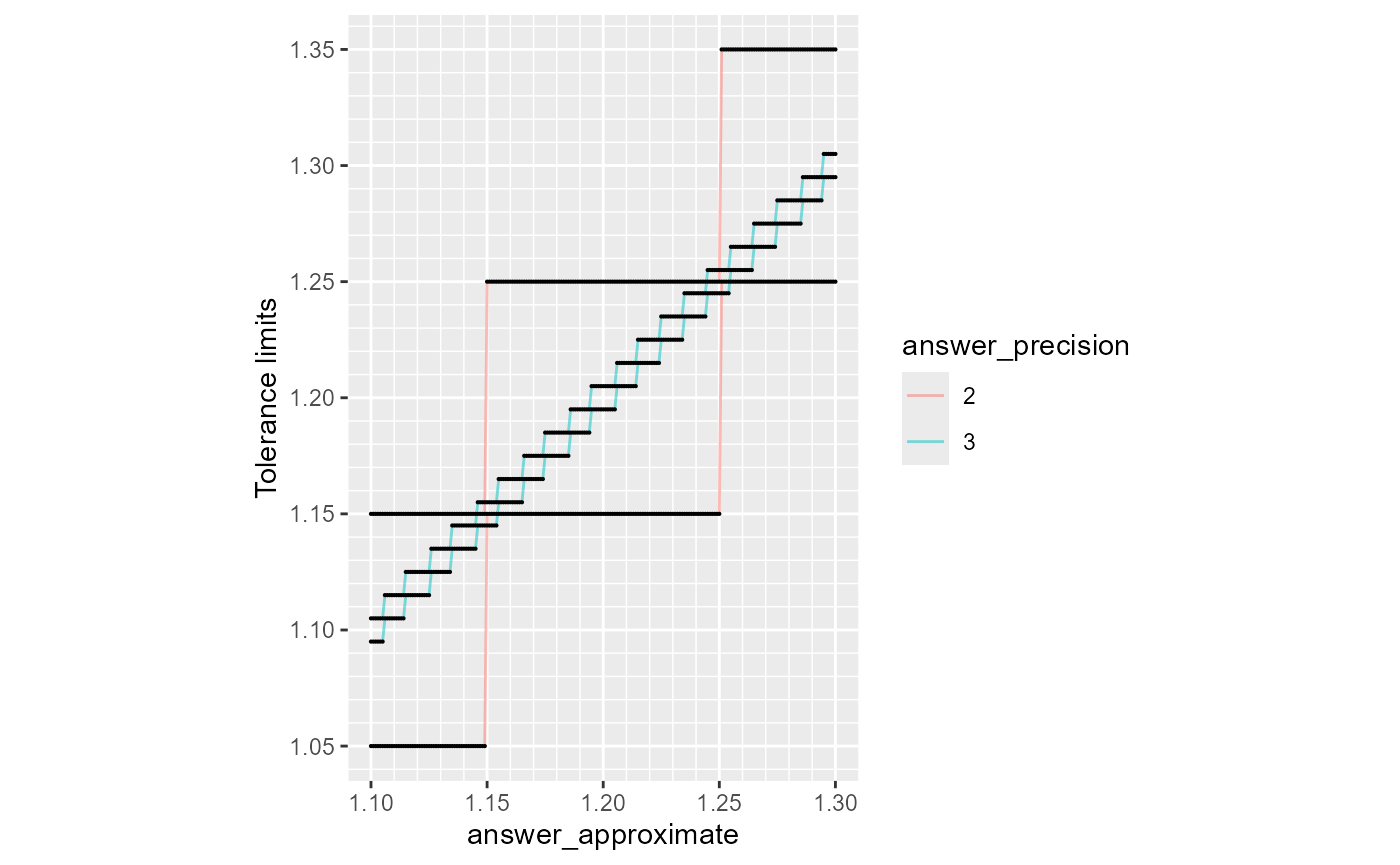

“Precision” is implemented differently than in Canvas:

- Round the “correct” answer to the desired number of significant digits using signif().
- Add a tolerance of plus/minus half a digit at that position.

This can be tricky to wrap your head around. Make sure to test, and maybe the illustration below is helpful.

Also be aware that R prints with a limited number of decimal places, which may differ from the Console to code chunks to inline R code in R Markdown documents.
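The two steps listed above can be sketched in base R as follows. precision_range() is a hypothetical helper written for this illustration, not a function exported by examiner, and it assumes a non-zero answer.

```r
precision_range <- function(answer, digits) {
  stopifnot(answer != 0, digits >= 1)
  rounded <- signif(answer, digits)
  # Size of one unit at the last significant digit.
  unit <- 10^(floor(log10(abs(rounded))) - digits + 1)
  c(lower = rounded - unit / 2, upper = rounded + unit / 2)
}

precision_range(3/7, 2)     # lower 0.425, upper 0.435
precision_range(1234.5, 3)  # lower 1225, upper 1235

# Printing may hide digits; ask for more when checking bounds.
print(3/7, digits = 15)
```

Comparing these bounds with what WISEflow actually accepts is still worthwhile, since the sketch only mirrors the description given here.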

essay_question = Essay Question

An essay question does not have a fixed answer and therefore has no Answerlist.

You might still use a # Solution section to provide

feedback to students after they submit the quiz by having a

## neutral_comments subsection.

file_upload_question = File Upload Question

A file upload question cannot be auto-scored and therefore has no Answerlist.

You might still use a # Solution section to provide

feedback to students after they submit the quiz by having a

## neutral_comments subsection.

text_only_question = Text (no question)

The Question section will be shown to students for information purposes. There is no way to respond.

true_false_question = True/False

This question type is not implemented in examiner, as

you can easily achieve the same with a

multiple_choice_question.

calculated_question = Formula Question

This question type, offering randomized numerical exercises, is not

implemented in examiner.

You can achieve a similar effect by using random text or numbers in

your Rmd question files and including the same question file multiple

times in the questions list in the YAML header, or making a

question bank.
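A minimal sketch of such randomization, written as R code that could go in a chunk of a question file. The numbers, the wording and the file name random_question.Rmd are made up for illustration.

```r
# Draw two random factors each time the question file is rendered.
a <- sample(2:9, 1)
b <- sample(2:9, 1)

question_text  <- sprintf("What is %d times %d?", a, b)
correct_answer <- a * b

# Listing the same file several times in the quiz header's questions field
# would then give several variants of the exercise.
questions <- rep("random_question.Rmd", 3)
```

The question text and the correct answer would then be inserted into the Question and Answerlist sections with inline R code.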